Some of you saw the clip Bag sent of the AI testing unit in which the AI lied … not only lied but also tried to find dirt on whoever threatened to shut it down … whether it developed this self-preservation instinct itself or, what seems more likely to MMutR* and myself, it interpreted the complex mass of instructions in a way the programmers hadn’t foreseen … either way … human disaster followed.

HAL 9000, I, Robot, Skynet … the list goes on … EV cars.

If, in fact, there’s no such thing as AI … if it’s a giant con … why would anyone wish for this? It is, in fact, machine learning, not intelligence, and the more of that learning that is loaded in, combining many conflicting commands in the “mind” of the machine, the greater the chance of error.

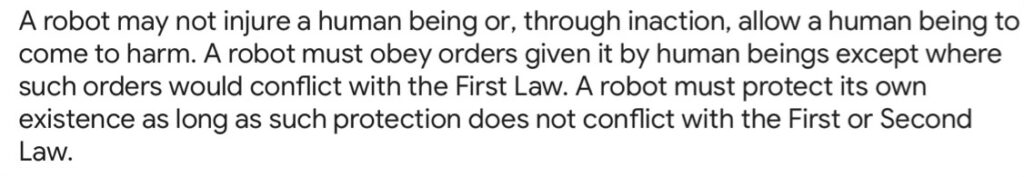

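As you’d be aware, Isaac Asimov formulated the Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.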

Many novels, treatises and films have been based on those.

Now, what sort of person would programme an “AI” bot in the first place? A mad scientist, I’d say, full of the perfectibility of machines, since humans seem to have been such a disappointment: always needing MK Ultra topping up, disobeying commands for reasons of soul and conscience, itself the result of a spirit inside the human … whereas the machine is beautiful, soulless, perfectly obedient.

We’re talking a Fauci or Mengele type minus the conscience, a Gates, plus that all-important Dunning-Kruger arrogance, seeing himself as quite on top of it all, quite rational:

… he in fact becomes a monster, an incompetent monster compared with what he’s creating … and yet he is driven on and on and on. Why? Only psychopathy? Or, to take a Christian perspective, as Poirot said to Jacqueline on the Nile … that way you are inviting in evil itself, and it will possess you (and have complete control).

Coming back to the new robot, with a maze of directives in his “mind”: as that testing showed … it will not only lie but also destroy the threat, even if that threat is a human. Or a thousand humans.

A million.

A billion.

You’re getting my drift here. And what if the Asimov triple constraints are actually missing … have been programmed out? Here, then, is a sentient new monster, with self-preservation in mind in order to complete the mission … and that mission is … the extermination of mankind, precisely what the WEFers and all those in the sewer with them want. Truly want.

How does a machine develop this killer instinct? It’s programmed in … that is, the diseased Dunning-Kruger mind (human, Anunnaki or Nephilim, whatever) is already evil and psychotic during the programming … and it backs itself up into the machine.

In other words, this goes far beyond SF novels, treatises and films grappling with Asimov … this is already Emperor Palpatine-grade evil, with his plan to exterminate humankind by the boiling-frog principle.

As long as you buy the premise that AI does not exist … that it is machine learning, end of … and that the teachers are utter psychos (look at what’s outside CERN HQ) … then:

What chance? Well, My Mate up the Road*, a techie, says that robots should only ever be programmed for simple tasks, so that such a thing could never occur. I quite agree.

Trouble is … try telling that to the Dunning-Kruger psycho who is having godlike dreams of ruling the universe. Tell him, and see whether he suddenly develops rationality, let alone a Christian conscience.

……

H/T Bag for many of the ideas in this post.

Yeeaah. Actually, I’m working on this at the moment. Literally: I just took a break from learning about agentic AI.

So, as you may have gathered from recent comments, I’m a software engineer. I’ve been doing nothing else for the last 40+ years, 25 of them as a contractor. I’m currently trying to a) integrate generative AI into my workflow, and b) train agentic AI through LLMs to recognise and fix “bad” data patterns.

What (the heck) does that mean? Simply put, a generative AI generates stuff for you based on inputs. These are usually text prompts: “ChatGPT, can you create a train picture for me. A majestic blue steam train, chuffing across the landscape into the foreground, with Pullman-like coaches in a purple livery with pink spots.” (Actually, I did almost exactly this a month ago for a birthday card.) In my workday, though, it’s more mundane: “Can you create a test for this new class I’ve written, using [test framework] and the standard Arrange, Act, Assert pattern common to unit tests.”

It’s actually Copilot, which makes use of ChatGPT, Claude, etc., and the prompts need more specificity; that specificity is what makes the generated code more accurate.
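For readers who haven’t met it, the Arrange, Act, Assert structure mentioned above looks roughly like this with Python’s built-in `unittest` module. This is a minimal sketch, not the commenter’s code: `PriceCalculator` and its `apply_discount` method are hypothetical, invented only to have something to test.

```python
import unittest


class PriceCalculator:
    """Hypothetical class under test: applies a percentage discount."""

    def apply_discount(self, price: float, percent: float) -> float:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        return round(price * (1 - percent / 100), 2)


class PriceCalculatorTests(unittest.TestCase):
    def test_apply_discount_reduces_price(self):
        # Arrange: build the object and the inputs
        calc = PriceCalculator()
        price, percent = 80.0, 25.0

        # Act: call the single method under test
        result = calc.apply_discount(price, percent)

        # Assert: check the outcome
        self.assertEqual(result, 60.0)

    def test_apply_discount_rejects_bad_percent(self):
        # Arrange
        calc = PriceCalculator()

        # Act + Assert: an out-of-range percentage should raise
        with self.assertRaises(ValueError):
            calc.apply_discount(80.0, 150.0)
```

Saved as, say, `test_price.py`, it runs with `python -m unittest test_price`. The appeal of the pattern is that each test reads top to bottom as setup, the one action being tested, then the checks, which is also why it is an easy shape to ask a code assistant for.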

Agentic AI, that is, using agents, is different. An agent does things, for example anomaly detection in time-series data, and it will make use of generative AI along the way. None of this is “intelligent”. It’s just very, very good pattern recognition: interpretation of prompts, generation of responses.
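The comment doesn’t say how the agent detects anomalies, and an LLM-driven agent would work differently. For a concrete sense of what “anomaly detection in time-series data” means at its simplest, here is a minimal, non-AI sketch using a rolling z-score, a classic statistical baseline swapped in purely for illustration: flag any point more than `threshold` standard deviations from the mean of the previous `window` points. The function name, window size, threshold and readings are all hypothetical.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a stdev")
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as anomalous
            if values[i] != mu:
                anomalies.append(i)
            continue
        z = (values[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies


# Made-up sensor readings with one obvious spike at index 6
readings = [10.1, 10.3, 9.9, 10.0, 10.2, 10.1, 25.0, 10.0, 9.8, 10.2]
print(rolling_zscore_anomalies(readings))  # prints [6]
```

One known weakness of this naive version: once the spike is inside the following windows it inflates their standard deviation, which can mask anomalies close behind it. Robust variants replace the mean and standard deviation with the median and median absolute deviation.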

My zealotry is recent, driven by a comment that “Software developers won’t be replaced by AI; they’ll be replaced by software developers who use AI”. I use Claude for the aforementioned test generation, and it’s, well, sort of OK. It saves me a lot of time.

But “override the shutdown command”? “Dish the dirt”? What have we learnt about the media?

🥱

Redacted

Mr JH-

how is your health lately?

I pray G-d’s hedge of protection about thee.

you are clearly under attack by various de mon ick agents

🙏🏻

Redacted